The role of libraries and librarians in artificial intelligence: What is a Machine Learning Librarian?

How can a Machine Learning Librarian make machine learning better?

What is the potential impact of AI and machine learning on libraries?

Almost every industry asks, “How will artificial intelligence impact us?” Answers to this question vary; some discuss vast opportunities and untapped potential, while others express deep existential concerns.

Libraries (and related institutions) are also asking this question. A core raison d'être for libraries is providing access to high-quality information. Large Language Models and other associated technologies claim to have a similar goal, so, unsurprisingly, libraries are thinking hard about what impact this technology will have on the sector and how best to respond. This is a question worth grappling with. However, in this post, I want to turn this question around and ask: “what is the potential impact of libraries on AI?”

What is the potential impact of libraries on AI: What is a Machine Learning Librarian?

In January this year, I took on a new job title, “Machine Learning Librarian” at Hugging Face. As far as I am aware, I am the only person with this job title – I’d love to know if there are more of us out there! I wanted to talk more about what I am trying to accomplish in this role and how it relates to broader possibilities for libraries and people with library backgrounds to impact the machine learning community.

What is Hugging Face?

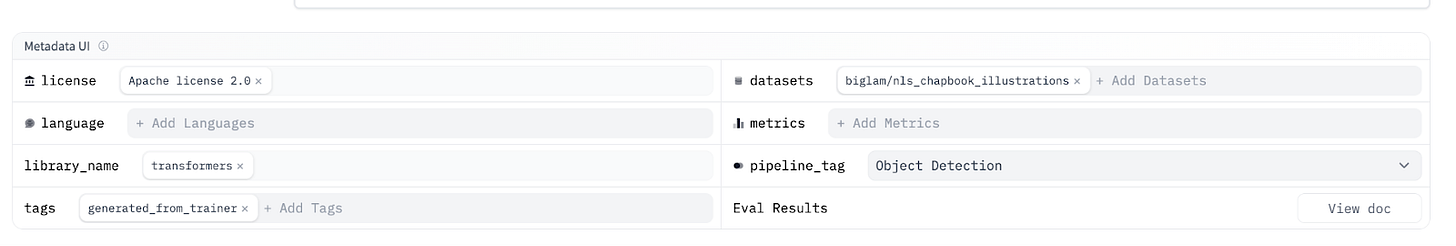

Hugging Face is a startup focused on democratising good machine learning. Hugging Face wants to empower more people to use and develop machine learning in a way that makes sense for their particular use case. Practically, this is done in a variety of ways: creating open-source software libraries, carrying out research into the potential ethical harms from machine learning and, importantly for this post, hosting a Hub which holds over 300,000 machine learning models, 62,753 datasets and thousands of machine learning applications and demos (known as Spaces). In this post, I will discuss one strand of my work: improving the metadata for these machine learning models, datasets and Spaces.

What is metadata and why is it necessary for machine learning?

Metadata, also known as ‘information about data’, can take various forms. Some metadata follows a specification or schema such as MARC, whilst other metadata is looser, for example user-generated tags. Essentially, metadata is a more or less structured way of describing things, in this case machine learning artefacts.

Metadata may not sound like the most exciting topic at face value. However, metadata is essential for a healthy machine learning ecosystem.

The Five Laws of Library Science by S. R. Ranganathan go as follows:

Books are for use.

Every person his or her book.

Every book its reader.

Save the time of the reader.

A library is a growing organism.

Switch out ‘book’ for machine learning artefact and ‘library’ for Hub, and we get close to what Hugging Face is trying to achieve! And none of this is possible without metadata...

Search and discovery

For every person to find their book (or their machine learning artefact!), metadata plays an essential role. When you search for a book by your favourite author, or go to a shelf labelled ‘history’, you’re using metadata to find the right type of book to meet your needs. Metadata can fulfil the same purpose when it comes to machine learning artefacts...

Want to know how a model can be used? It’ll be important to understand the model’s licence.

Want to know which language a model is for? A language tag can help you find the right model.

Want to find models covering a particular domain? Tags can help find models for clinical applications or models focused on climate research.
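To make this concrete, here is a minimal sketch in Python of how tags support discovery. Everything in it is hypothetical: the `ModelRecord` fields, the example model ids and the `find_models` helper are made up for illustration and are not the Hub's actual data or API.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class ModelRecord:
    """A hypothetical, simplified metadata record for one model."""
    model_id: str
    license: Optional[str] = None
    language: Optional[str] = None
    tags: Set[str] = field(default_factory=set)


# Made-up records; the last one has no metadata at all.
RECORDS = [
    ModelRecord("example/clinical-ner-en", "apache-2.0", "en", {"clinical"}),
    ModelRecord("example/climate-classifier-fr", "mit", "fr", {"climate"}),
    ModelRecord("example/untagged-model"),
]


def find_models(
    records: List[ModelRecord],
    license: Optional[str] = None,
    language: Optional[str] = None,
    tag: Optional[str] = None,
) -> List[str]:
    """Return the ids of records that match every filter that was supplied."""
    matches = []
    for record in records:
        if license is not None and record.license != license:
            continue
        if language is not None and record.language != language:
            continue
        if tag is not None and tag not in record.tags:
            continue
        matches.append(record.model_id)
    return matches


english_models = find_models(RECORDS, language="en")
climate_models = find_models(RECORDS, tag="climate")
```

Note that the model without metadata can never be returned by a filtered search, however good it is. This is the practical cost of missing metadata.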

Understanding the lineage of machine learning models

Many machine learning models are created by starting from an existing model and updating this model for a slightly different use case (often referred to as fine-tuning).

Having metadata for which model was used as a starting point (known as a base_model) can help us:

Understand which models are having the most impact on the ecosystem, i.e. are being successfully used by others. Base Model Explorer is an application that allows you to explore this impact.

“Inherit” information from the parent model. For example, if we know the carbon footprint of using the parent model, we can better predict the likely carbon impact of the fine-tuned model.

Know if ethical concerns with the base_model might also impact the fine-tuned model.
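The three uses above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the model ids are invented, `known_concerns` is a hypothetical field, and the only name taken from this post is `base_model`.

```python
from collections import Counter

# Hypothetical metadata: model id -> metadata fields.
MODELS = {
    "example/base": {
        "base_model": None,
        "known_concerns": ["trained on unfiltered web text"],
    },
    "example/base-finetuned-legal": {"base_model": "example/base"},
    "example/base-finetuned-medical": {"base_model": "example/base"},
    "example/legal-v2": {"base_model": "example/base-finetuned-legal"},
}


def count_direct_children(models):
    """Count how many models name each model as their base_model."""
    return Counter(
        meta["base_model"] for meta in models.values() if meta.get("base_model")
    )


def lineage(model_id, models):
    """Follow base_model links from a model back towards its root."""
    chain = []
    seen = {model_id}  # guards against accidental cycles in the metadata
    current = models[model_id].get("base_model")
    while current is not None and current not in seen:
        chain.append(current)
        seen.add(current)
        current = models.get(current, {}).get("base_model")
    return chain


def inherited_concerns(model_id, models):
    """Collect concerns recorded on any ancestor of the given model."""
    concerns = []
    for ancestor in lineage(model_id, models):
        concerns.extend(models.get(ancestor, {}).get("known_concerns", []))
    return concerns


children = count_direct_children(MODELS)
ancestors = lineage("example/legal-v2", MODELS)
concerns = inherited_concerns("example/legal-v2", MODELS)
```

Here `children` shows which models are most often built upon, while `concerns` surfaces an issue recorded two generations up that the fine-tuned model's own metadata never mentions.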

How can a Machine Learning Librarian help improve metadata?

We’ve covered the why of metadata, what about the how? The Hugging Face Hub is — as the name suggests — a hub! The machine learning models, datasets and demos uploaded to the Hub are largely created by the community. This has some interesting implications for how you curate and manage metadata on this platform.

Machine Learning can help with creating metadata

There is a role for machine learning in helping to create metadata for machine learning artefacts. For example, many of the datasets on the Hugging Face Hub have historically lacked metadata about the language used.

Whilst I can go into individual models or datasets and suggest improvements to the metadata and documentation, this doesn’t scale well when the Hub hosts over 300,000 models. One solution to this is to leverage machine learning to help extend what a single person can do. This is also where the ‘machine learning’ part of ‘Machine Learning Librarian’ comes to the fore.

Librarian Bots

Since starting at Hugging Face, I’ve been exploring using machine learning models to try to fill in some of these gaps, such as predicting which language should be associated with a particular dataset. This work was done via Librarian-bot, a bot user on the Hugging Face Hub that makes suggestions to users about improving metadata. These suggestions are made using a variety of techniques: some are relatively simple and involve no machine learning, whilst others use machine learning models to fill in useful metadata for a model or a dataset.

Accepting these suggestions is low effort for the creator of the dataset but helps ensure people can find datasets that are relevant to the languages they want to work with. You can read more about this work here.
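As a rough illustration of the idea, and not a description of how Librarian-bot is actually implemented, one way to turn per-row language predictions into a dataset-level suggestion is a majority vote with a confidence threshold. The sketch below assumes the predictions already exist (from whatever language identification model is used); the `suggest_language` helper and the `min_share` threshold are hypothetical.

```python
from collections import Counter
from typing import List, Optional


def suggest_language(
    row_predictions: List[str], min_share: float = 0.8
) -> Optional[str]:
    """Suggest a language tag only if one language clearly dominates.

    row_predictions holds one predicted language code per sampled row.
    Returns None when there is no data or no language reaches min_share,
    so that no suggestion is made in ambiguous cases.
    """
    if not row_predictions:
        return None
    language, count = Counter(row_predictions).most_common(1)[0]
    share = count / len(row_predictions)
    return language if share >= min_share else None


mostly_english = suggest_language(["en"] * 9 + ["fr"])
mixed = suggest_language(["en", "fr", "de", "en"])
```

Declining to suggest anything for mixed or uncertain datasets keeps the bot's suggestions cheap for dataset creators to review, because the final decision still rests with a person.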

Automation and new tools are an important part of the picture

Whilst, as demonstrated above, metadata for models and datasets can be improved ‘retrospectively’, it usually makes most sense to create this metadata as early as possible. Many of the open source libraries created by Hugging Face, such as Transformers, already create model cards automatically. Model cards are one tool used for documenting machine learning models. These cards consist of a template which aims to ensure relevant parts of a model are clearly documented, e.g. the potential biases of a model and a discussion of the training data used to create the model.

More recently, tools like Lilac have been introduced to help better understand machine learning datasets. Potentially, tools like these could be expanded to help create metadata when users upload datasets to the Hugging Face Hub. Similarly, tools like Argilla, which help users create training data, could help deliver better metadata to the Hub as datasets are created. Fostering discussions about why and how this automation of metadata creation can be done, as well as building understanding of metadata’s importance for the machine learning ecosystem, are key roles of a Machine Learning Librarian.

What else?

This is the first in a series of posts I plan to write about libraries and AI. I plan to talk in more detail about how I think about the role of Machine Learning in creating metadata and when and where this should and shouldn’t be used. As part of this I’ll also talk about some approaches I think libraries should consider when introducing machine learning into their workflows.

I would love to know if there are topics you think are particularly important in relation to this!