Fixing search with AI?

How machine learning can help improve existing search

In this post, I will take a break from the series on ‘metadata for machine learning’ and turn instead to how machine learning can aid in search.

There has been enormous interest in applying AI and machine learning to search. At the most bullish end of the scale, AI, and in particular Large Language Models (LLMs), are seen as a way to replace traditional approaches to search entirely: instead of using a search system to retrieve relevant results from some database (or the web), the system should respond to the query directly. Rather than taking the query “recipe for vegan haggis” and retrieving documents likely to be relevant to it, the LLM should simply answer.

Some people have analogised how this works internally to a form of search, with the critical difference being that the search is not taking place against some external documents but rather ‘internally’ within the model's weights. Whether this analogy is valid or not (I don’t think it is) is beyond the scope of this post.

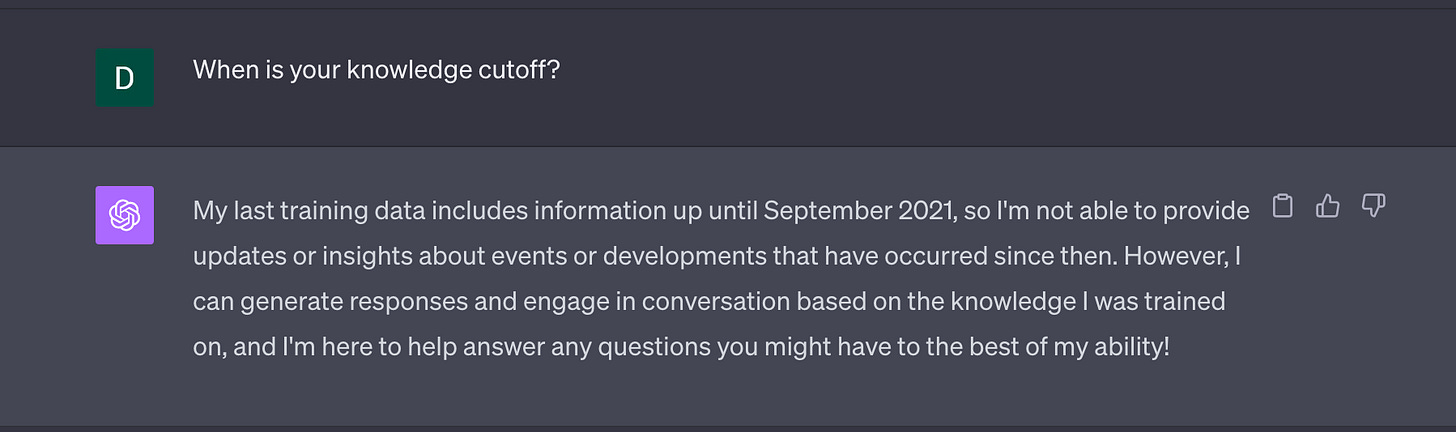

One of the often-mentioned limitations of this approach is that LLMs can become outdated. The world is constantly changing, and so is the language we use to describe it: new words are introduced, new concepts and facts emerge, and what someone means by a particular series of words slowly shifts. All of this can cause trouble for an LLM, and an LLM’s ‘knowledge’ cut-off can become very apparent when it is treated as a search system.

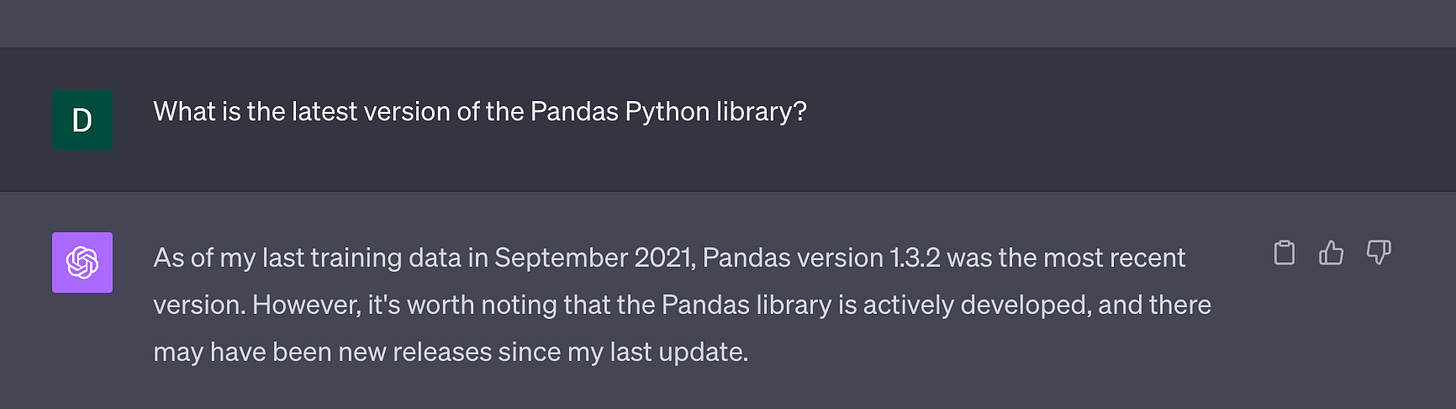

As a concrete example, ChatGPT doesn’t know much about 2023. If you ask ChatGPT about the Python library Pandas, it is only aware of versions up to 1.3.2. At the time of writing, the most recent version of the library is 2.1.1.

This is a major version bump: some things that worked in previous versions no longer work, and there are changes to the internals of Pandas that ChatGPT won’t ‘know’ about.
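To make this concrete with one example I’m confident of (my own illustration, not taken from ChatGPT’s answers): `DataFrame.append` was deprecated in pandas 1.4 and removed in 2.0, so a snippet suggested from pre-2.0 knowledge can now fail with an `AttributeError`. A minimal sketch of the version-independent alternative, `pd.concat`:

```python
import pandas as pd

papers = pd.DataFrame({"title": ["Paper A"], "new_dataset": [True]})
extra = pd.DataFrame({"title": ["Paper B"], "new_dataset": [False]})

# Older answers often suggest papers.append(extra); that method no longer
# exists in pandas 2.x. pd.concat works in both 1.x and 2.x.
combined = pd.concat([papers, extra], ignore_index=True)
print(combined)
```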

One way around this is to give LLM-based systems access to the internet. This helps overcome the knowledge cut-off, although even this doesn’t always work.

There are many other areas where LLMs and machine learning are impacting search: Retrieval Augmented Generation (RAG), long-context embeddings, multimodal search, etc. However, many of these systems are hard to personalise beyond trying to steer parts of the system through prompt engineering or fine-tuning the model.

In the rest of this post, I instead want to briefly discuss an example of another approach to leveraging machine learning to assist search. Whilst my example is very specific, I believe this approach could be relevant to many applications where an existing search system is falling short and you have limited resources to improve this situation through machine learning.

What's the problem with traditional search?

I try to keep track of developments in machine learning. As anyone else who tries this knows, it is almost impossible; there is a firehose of new information to assess for relevance, quality and applicability to your needs. One area I particularly want to follow is the release of new datasets. Specifically, I want to track new datasets introduced in papers on the arXiv preprint server, where many (though not all) of the papers describing datasets I’m interested in are likely to be published.

How could I approach this using traditional search? I want to find papers mentioning datasets. A keyword search for ‘dataset’ seems like a sensible starting point.

If you have any experience searching library systems or databases, you can probably already see that an advanced search would do better. For example, we could specify that ‘dataset’ must appear in the title, which is likely a stronger signal that a paper is primarily about a dataset.
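As a minimal sketch of the difference, here is how the two searches might be expressed against the arXiv API, assuming its `search_query` parameter and field prefixes (`all:` for any field, `ti:` for the title). The helper name `arxiv_query_url` is my own:

```python
from urllib.parse import urlencode

ARXIV_API = "http://export.arxiv.org/api/query"

def arxiv_query_url(search_query: str, start: int = 0, max_results: int = 50) -> str:
    """Build an arXiv API request URL; urlencode escapes ':' and spaces."""
    params = {"search_query": search_query, "start": start, "max_results": max_results}
    return f"{ARXIV_API}?{urlencode(params)}"

anywhere = arxiv_query_url("all:dataset")  # 'dataset' in any field
in_title = arxiv_query_url("ti:dataset")   # 'dataset' must be in the title
print(in_title)
# → http://export.arxiv.org/api/query?search_query=ti%3Adataset&start=0&max_results=50
```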

Even so, we still get some results that aren’t about new datasets but about other things to do with datasets. We could keep adjusting the search, but it is hard to express what we’re after as a query, because the concept of a ‘new dataset’ doesn’t map directly onto a set of key terms.
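To illustrate why, here is a hypothetical hand-written rule applied to four made-up titles (not real arXiv papers), each paired with the label a person would give it. The rule catches the obvious case but gets fooled in both directions:

```python
import re

# Hypothetical rule: a "new"-type word followed later by "dataset(s)".
RULE = re.compile(r"\b(new|novel|introduc\w*)\b.*\bdatasets?\b", re.IGNORECASE)

def looks_like_new_dataset(title: str) -> bool:
    return RULE.search(title) is not None

# Made-up titles paired with the label a human would assign.
examples = [
    ("A New Dataset for Multilingual Question Answering", True),
    ("Do New Datasets Improve Robustness? An Empirical Study", False),
    ("Dataset Pruning for Faster Training", False),
    ("MultiSum: 1M Summaries Across 20 Languages", True),
]

mistakes = [title for title, human in examples if looks_like_new_dataset(title) != human]
for title in mistakes:
    print("rule disagrees with human label:", title)
```

The second title slips through because it mentions new datasets without introducing one; the fourth is missed because it introduces a dataset without ever using the word.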

How can machine learning help?

This problem is hard to solve with ‘traditional’ search techniques because what we want is difficult to express as a query. Yet given a set of results, we can usually say quite quickly, ‘This one is about a new dataset’ and ‘This one isn’t’. In other words, it’s easy for us to show what we want but much harder to describe it.

Since we can easily produce examples of what we want, we can also collect them into a dataset. That dataset can then serve as training data for a machine learning model that performs the task for us.

The myth of a lot of data

Even if labelling a few examples is feasible, we might still worry about how many we’d need to label to get good results. If we have to label a large number of examples, annotating a dataset to train a machine learning model won’t save us any time.

This is also one of the reasons LLMs, and chat tools like ChatGPT in particular, are so popular: they promise you won’t need to create any training data.

SetFit: Efficient Few-Shot Learning Without Prompts

This is where the SetFit library (and the approach behind it) might offer an alternative that doesn’t rely on large amounts of annotated data. SetFit “achieves high accuracy with little labeled data - for instance, with only 8 labeled examples per class on the Customer Reviews sentiment dataset, SetFit is competitive with fine-tuning RoBERTa Large on the full training set of 3k examples”.
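To make “8 labeled examples per class” concrete, here is a small sketch of drawing a balanced few-shot training set from labelled rows. The `{"text", "label"}` row format and the function name are my assumptions; SetFit also ships a `sample_dataset` helper that does something similar for Hugging Face datasets.

```python
import random
from collections import defaultdict

def sample_per_class(rows, n_per_class=8, seed=42):
    """Draw up to n_per_class rows for each label, giving a balanced few-shot set."""
    by_label = defaultdict(list)
    for row in rows:
        by_label[row["label"]].append(row)
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    sample = []
    for label in sorted(by_label):
        group = by_label[label]
        sample.extend(rng.sample(group, min(n_per_class, len(group))))
    return sample
```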

I will write a separate post that goes into the technical details of how this works. For now, I’ll focus on the steps involved, so you can get a better sense of whether this approach might work for you.

Labelling data

The first thing I needed was some data. arXiv provides an API, and I used a Python wrapper for it to download metadata for papers matching a search for “dataset” in the title. This metadata includes each paper’s title and abstract.
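The approach doesn’t depend on which wrapper you use. As an alternative sketch that needs only the standard library, here is how the title and abstract could be pulled out of the raw Atom XML that the arXiv API returns (in that feed, the abstract lives in each entry’s `summary` element). The function names are hypothetical:

```python
import xml.etree.ElementTree as ET

NS = {"atom": "http://www.w3.org/2005/Atom"}

def _clean(entry: ET.Element, tag: str) -> str:
    """Get an entry field, collapsing any line breaks and indentation inside it."""
    return " ".join(entry.findtext(f"atom:{tag}", default="", namespaces=NS).split())

def parse_arxiv_feed(xml_text: str) -> list:
    """Return id, title and abstract for each <entry> in an arXiv API response."""
    root = ET.fromstring(xml_text)
    return [
        {"id": _clean(e, "id"), "title": _clean(e, "title"), "abstract": _clean(e, "summary")}
        for e in root.findall("atom:entry", NS)
    ]
```

You would pass it the body of the HTTP response for a query URL such as one using `search_query=ti:dataset`.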

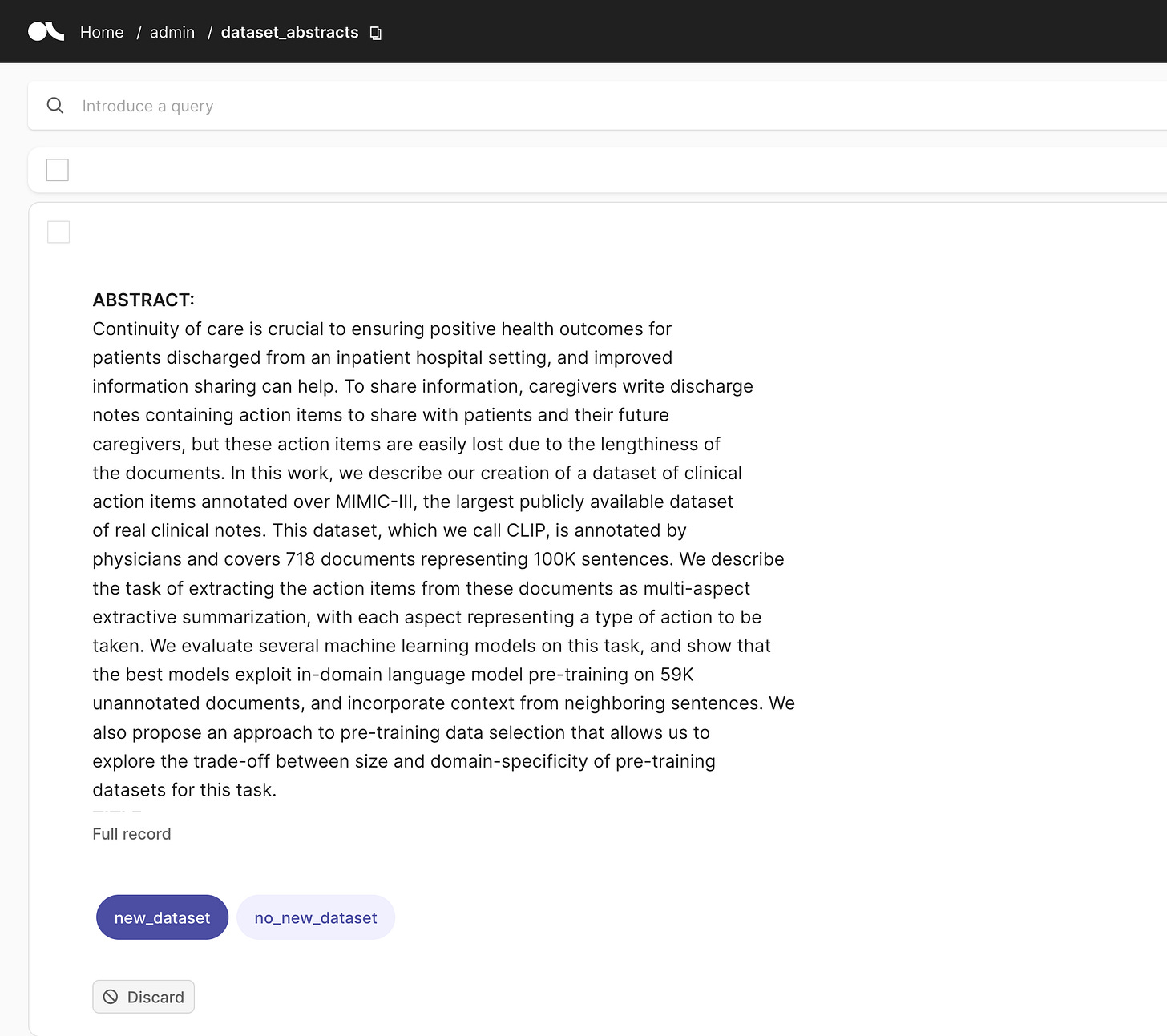

Next, I labelled some examples; in my case, around 150. I did this using Argilla, which provides an excellent interface for bulk labelling, so although 150 sounds like quite a lot of data to label, I was, for the most part, able to get through it quickly.
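However the labelling is done, the result is essentially a list of (title, abstract, label) records. The sketch below assumes a hypothetical JSON Lines export with `title`, `abstract` and `label` fields (not Argilla’s actual export schema) and converts it into `{"text", "label"}` rows, skipping anything left unlabelled:

```python
import json

# The two labels used in this post, mapped to integer class ids.
LABEL_IDS = {"no new dataset": 0, "new dataset": 1}

def load_labelled(jsonl_text: str) -> list:
    """Turn a hypothetical JSONL export into {"text", "label"} training rows."""
    rows = []
    for line in jsonl_text.splitlines():
        if not line.strip():
            continue
        record = json.loads(line)
        label = record.get("label")
        if label not in LABEL_IDS:  # skipped or unlabelled records
            continue
        rows.append({
            "text": f"{record['title']}\n\n{record['abstract']}",
            "label": LABEL_IDS[label],
        })
    return rows
```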

Training a model

Unfortunately, this part still requires some hands-on coding. However, the SetFit library is very user-friendly, so if you are familiar with Python you shouldn’t have much trouble. Using some of the labelled data for training and the rest for validation, I trained a model that reached 91% accuracy. All this model does is map a paper’s title and abstract to one of two labels, ‘new dataset’ or ‘no new dataset’, but this simple model does exactly what I want, without my having to handcraft rules covering every pattern that might indicate a title or abstract describes a new dataset.
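A minimal sketch of this step, assuming the SetFit 1.x API (`SetFitModel`, `Trainer`, `TrainingArguments`) and the Hugging Face `datasets` library. The base model, batch size, epoch count and 80/20 split are illustrative choices, not the settings behind the 91% figure, and the helper names are my own:

```python
import random

def train_val_split(rows, val_fraction=0.2, seed=0):
    """Shuffle a copy of the labelled rows and hold back a validation slice."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]

def train_setfit(train_rows, val_rows,
                 model_id="sentence-transformers/paraphrase-mpnet-base-v2"):
    """Fine-tune a binary SetFit classifier and return it with validation metrics."""
    # Imported lazily so the split helper works without these libraries installed.
    from datasets import Dataset
    from setfit import SetFitModel, Trainer, TrainingArguments

    def to_dataset(rows):
        return Dataset.from_dict({"text": [r["text"] for r in rows],
                                  "label": [r["label"] for r in rows]})

    # Label ids 0 and 1 map to these names, in order.
    model = SetFitModel.from_pretrained(model_id, labels=["no new dataset", "new dataset"])
    args = TrainingArguments(batch_size=16, num_epochs=1)
    trainer = Trainer(model=model, args=args,
                      train_dataset=to_dataset(train_rows),
                      eval_dataset=to_dataset(val_rows),
                      metric="accuracy")
    trainer.train()
    return model, trainer.evaluate()  # a dict such as {"accuracy": ...}
```

With 150 labelled rows, `train_val_split(rows)` keeps 120 for training and 30 for validation, which are then passed to `train_setfit`.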

This model could be improved relatively quickly, but it already gives a good starting point for filtering to a better set of papers that more closely fit what we’re looking for.

Interface

With a model in hand, the next step was to create a simple interface that uses it to filter the results of the initial arXiv search query. The interface is pretty simple but exposes most of what I need.
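The filtering step itself can be small. Here is a sketch that keeps only the papers a classifier labels ‘new dataset’. `predict` stands in for any function mapping a list of texts to a list of labels (for example, a thin wrapper around the trained model), and the texts are assumed to be formatted the same way as the training data (here, title, blank line, abstract). The function name is hypothetical:

```python
from typing import Callable, Dict, Iterable, List

def filter_new_datasets(papers: Iterable[Dict[str, str]],
                        predict: Callable[[List[str]], List[str]]) -> List[Dict[str, str]]:
    """Keep the search results that the classifier labels 'new dataset'."""
    papers = list(papers)
    texts = [f"{p['title']}\n\n{p['abstract']}" for p in papers]
    labels = predict(texts)  # one label per text, in input order
    return [p for p, label in zip(papers, labels) if label == "new dataset"]
```

Keeping the model behind a plain callable means the interface code doesn’t change if the classifier is retrained or swapped.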

The nice thing about this approach is that a large part of the heavy lifting can be done by arXiv. Do you want to filter by the arXiv category? Use the existing infrastructure. Do you want to filter to include a date range? Use the existing infrastructure. Want to specify other more complex search strategies? You guessed it, you can use the existing infrastructure.
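For example, category and date filters can simply be added to the query sent to arXiv, and the classifier then only sees the narrowed results. This sketch assumes the arXiv API’s `cat:` prefix, `AND` operator and `submittedDate:[START TO END]` range syntax (timestamps as `YYYYMMDDTTTT` in GMT); `build_search_query` is a hypothetical helper:

```python
from typing import Optional
from urllib.parse import urlencode

ARXIV_API = "http://export.arxiv.org/api/query"

def build_search_query(keyword: str, category: Optional[str] = None,
                       submitted_from: Optional[str] = None,
                       submitted_to: Optional[str] = None) -> str:
    """Combine a title keyword with optional category and date filters."""
    parts = [f"ti:{keyword}"]
    if category:
        parts.append(f"cat:{category}")
    if submitted_from and submitted_to:
        # Both ends use YYYYMMDDTTTT (GMT, to the minute).
        parts.append(f"submittedDate:[{submitted_from} TO {submitted_to}]")
    return " AND ".join(parts)

query = build_search_query("dataset", category="cs.CL",
                           submitted_from="202301010000", submitted_to="202312312359")
url = f"{ARXIV_API}?{urlencode({'search_query': query, 'max_results': 100})}"
print(query)
# → ti:dataset AND cat:cs.CL AND submittedDate:[202301010000 TO 202312312359]
```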

Conclusion


Traditional search interfaces can be limited; they struggle to give users a way to express everything they might be looking for. Approaches like semantic search and RAG offer many opportunities, but they also come with a high implementation cost. Whilst more ‘traditional’ databases also provide semantic search functionality, it isn’t always trivial to set up. If you don’t have the time or the money to implement a new approach that fundamentally changes how you offer people search, there may still be scope for something that sits on top of an existing search interface to help refine its results. This approach will likely be much cheaper to implement, and it is often relatively quick to experiment with this kind of filtering of search results.

If you have a search-related use case that could benefit from this approach, I’d love to hear from you!